Apple's long-rumored mixed-reality headset may feature iris scanning for authentication, according to reliable analyst Ming-Chi Kuo.

In a recent note to investors, seen by MacRumors, Kuo said he believes the headset may support iris recognition, based on the hardware currently understood to be inside the device.

> We are still unsure if the Apple HMD can support iris recognition, but the hardware specifications suggest that the HMD's eye-tracking system can support this function.
>
> If the Apple HMD can support iris recognition, it could provide a more intuitive way for users to use Apple Pay when using the HMD.

Kuo has previously said that Apple's headset will contain 15 camera modules in total. Eight of them will be used for see-through augmented reality experiences, one for environmental detection, and six for "innovative biometrics." These biometrics could include the iris scanning Kuo refers to, as well as directional eye tracking.

One practical application of iris recognition in the headset is Apple Pay authentication. Just as Touch ID and Face ID authenticate Apple Pay on other Apple devices, iris scanning could serve the same role on the headset when purchasing digital content. Apple reportedly wants to create an App Store for the headset focused on gaming, streaming video, and video conferencing, in which Apple Pay would likely play a central role.

Beyond Apple Pay, iris recognition could also be used to unlock the device, preventing unauthorized users from wearing the headset.

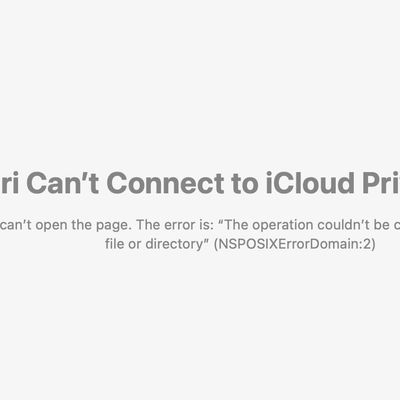

Apple has researched eye-tracking technology extensively and has filed a number of patents covering systems that track a user's gaze within a head-mounted display using reflected infrared light.


Kuo's description of Apple's eye-tracking technology closely matches the system outlined in Apple's patents: a transmitter and receiver detect and analyze eye movement, and algorithms use that information to present images and information to the user.

> Apple's eye-tracking system includes a transmitter and a receiver. The transmitting end provides one or several different wavelengths of invisible light, and the receiving end detects the change of the invisible light reflected by the eyeball, and judges the eyeball movement based on the change.

Kuo says that most head-mounted devices are operated with handheld controllers that cannot provide a smooth user experience. He believes an eye-tracking system like the one Apple will use offers several advantages: an intuitive visual experience that interacts seamlessly with the external environment, more intuitive operation that can be controlled with eye movements, and a reduced computational burden, since areas where the user is not looking can be rendered at lower resolution.

The Information previously reported that Apple's headset will feature advanced eye-tracking capabilities along with more than a dozen cameras for tracking hand movements, while Bloomberg reported that the headset will be a "mostly virtual reality device" offering a 3D environment for gaming, watching videos, and communicating. AR functionality will be limited, and Apple plans to include powerful processors to handle the gaming features.

Earlier this month, Kuo said that Apple would release its mixed-reality headset in "mid-2022," followed by augmented reality glasses in 2025.