Apple has been rumored to be working on advanced speech recognition technology for some time.

After Apple's acquisition of Siri, TechCrunch reported in May that negotiations were ongoing with Nuance to integrate their speech recognition technology into iOS. Earlier this week, 9to5mac detailed much of what is expected to be the major new feature in the next generation iPhone to be release on October 4th. The story was met with some skepticism in our forums, but we were especially confident in the report as we had privately heard the exact same story from our sources.

The Siri/Nuance-based voice recognition Assistant will be the major new feature in the next generation iPhone and will be an iPhone 4S/5 exclusive feature. As detailed, the Assistant will allow users to speak to their iPhones in order to schedule appointments, send text messages, retrieve information and much more. Voice recognition happens on the fly and reportedly with great accuracy.

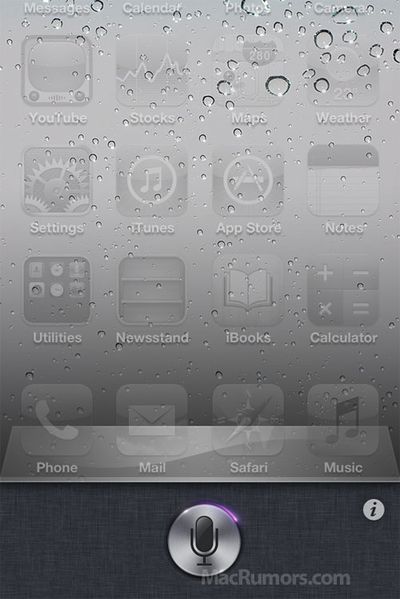

Assistant interface, artist rendition

We've created this artist rendition (above) of what the Assistant interface looks like based on sources with knowledge of the feature. After a long press on the home button, the screen fades and slides up, just like with the multitasking interface. Revealed is a silver icon with an animated orbiting purple flare which indicates a ready state. From what we've been told, this image is a close representation of the actual Assistant interface.

From there, the user may be taken to a conversation view that somewhat mimics Siri's original interface, but in Apple's own styling.

Based on this knowledge, we contracted Jan-Michael Cart to put together a video representation of the Assistant workflow. Cart had been responsible for a number of iOS concept videos in the past, but we wanted to mock up what the actual Assistant interface looked like. Due to the short turnaround, we know that not all elements in the video represent actual look/feel of the Assistant interface, but should show off the general workflow.

After receiving spoken commands, the Assistant shows you back the recognized text and then takes the next step. This could involve sending a text message (with confirmation) or pulling data from Wolfram Alpha. The feature is said to be one of the major differentiators for the next generation iPhone.

Apple is hosting a media event on October 4th, where they will introduce the next iPhone and these new features.