Apple has been awarded a patent by the U.S. Patent and Trademark Office (via AppleInsider) for a digital camera including a refocusable imaging mode adapter, with the document also discussing the potential use of a similar camera system in a device like the iPhone.

The patent details a camera that can be switched between two modes: a lower-resolution mode that allows refocusing and a high-resolution mode that does not. An image mode adaptor in the camera's body switches between the two.

The patent also cites the plenoptic imaging system used in the Lytro light-field camera as prior art. Apple draws inspiration from that system but points out that its own microlens array can produce higher-quality images because of a higher spatial resolution.

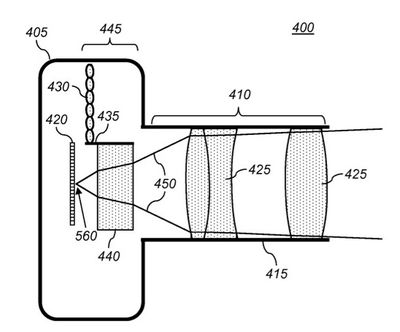

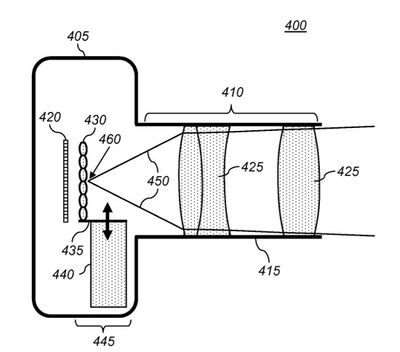

> A digital camera system configurable to operate in a low-resolution refocusable mode and a high-resolution non-refocusable mode comprising: a camera body; an image sensor mounted in the camera body having a plurality of sensor pixels for capturing a digital image;
>
> an imaging lens for forming an image of a scene onto an image plane, the imaging lens having an aperture; and an adaptor that can be inserted between the imaging lens and the image sensor to provide the low-resolution refocusable mode and can be removed to provide the high-resolution non-refocusable mode,
>
> the adaptor including a microlens array with a plurality of microlenses; wherein when the adaptor is inserted to provide the low-resolution refocusable mode, the microlens array is positioned between the imaging lens and the image sensor.

Apple's patent outlines how such a lens system could be integrated with a more complete camera solution incorporating image correction and other features, either in a standalone product or within a mobile device.

The Lytro-like technology naturally leads to speculation that it could be used in Apple's rumored standalone point-and-shoot digital camera. That rumor first surfaced in 2012, after Walter Isaacson's biography of Steve Jobs quoted Jobs as saying he wanted to reinvent three industries, one of them photography. The biography also noted that Jobs had met with the CEO of Lytro, although it remains unclear how much direct interest Apple had in Lytro's technology.