Last week, Apple previewed new child safety features that it said will be coming to the iPhone, iPad, and Mac with software updates later this year. The company said the features will be available in the U.S. only at launch.

A refresher on Apple's new child safety features from our previous coverage:

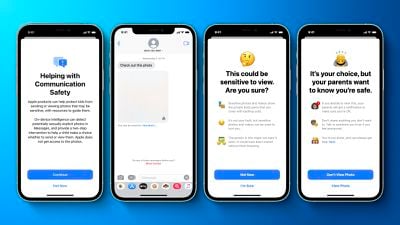

First, an optional Communication Safety feature in the Messages app on iPhone, iPad, and Mac can warn children and their parents when a child receives or sends sexually explicit photos. Apple said that when the feature is enabled, the Messages app will use on-device machine learning to analyze image attachments. If a photo is determined to be sexually explicit, it will be automatically blurred and the child will be warned.

Second, Apple will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies. Apple confirmed today that the process will apply only to photos being uploaded to iCloud Photos, not to videos.

Third, Apple will be expanding guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Since announcing the plans last Thursday, Apple has received some pointed criticism, ranging from NSA whistleblower Edward Snowden claiming that Apple is "rolling out mass surveillance" to the non-profit Electronic Frontier Foundation claiming that the new child safety features will create a "backdoor" into the company's platforms.

"All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children's, but anyone's accounts," cowrote the EFF's India McKinney and Erica Portnoy. "That's not a slippery slope; that's a fully built system just waiting for external pressure to make the slightest change."

The concerns extend to the general public: over 7,000 individuals have signed an open letter against Apple's so-called "privacy-invasive content scanning technology," calling on the company to abandon its planned child safety features.

So far, it does not appear that the negative feedback has led Apple to reconsider its plans. We confirmed with Apple today that the company has not changed the timing of the new child safety features, which are still slated to arrive later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. With the features not expected to launch for several weeks to months, though, the plans could still change.

Apple sticking to its plans will please several advocates, including Julie Cordua, CEO of the international anti-human trafficking organization Thorn.

"The commitment from Apple to deploy technology solutions that balance the need for privacy with digital safety for children brings us a step closer to justice for survivors whose most traumatic moments are disseminated online," said Cordua.

"We support the continued evolution of Apple's approach to child online safety," said Stephen Balkam, CEO of the Family Online Safety Institute. "Given the challenges parents face in protecting their kids online, it is imperative that tech companies continuously iterate and improve their safety tools to respond to new risks and actual harms."