Apple today previewed new child safety features that will be coming to its platforms with software updates later this year. The company said the features will be available in the U.S. only at launch and will be expanded to other regions over time.

Communication Safety

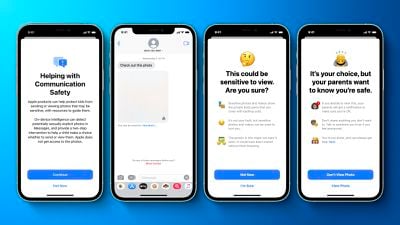

First, the Messages app on the iPhone, iPad, and Mac will be getting a new Communication Safety feature to warn children and their parents when receiving or sending sexually explicit photos. Apple said the Messages app will use on-device machine learning to analyze image attachments, and if a photo is determined to be sexually explicit, the photo will be automatically blurred and the child will be warned.

When a child attempts to view a photo flagged as sensitive in the Messages app, they will be alerted that the photo may contain private body parts, and that the photo may be hurtful. Depending on the age of the child, there will also be an option for parents to receive a notification if their child proceeds to view the sensitive photo or if they choose to send a sexually explicit photo to another contact after being warned.

Apple said the new Communication Safety feature will be coming in updates to iOS 15, iPadOS 15, and macOS Monterey later this year for accounts set up as families in iCloud. Apple said that iMessage conversations will remain protected with end-to-end encryption, making private communications unreadable by Apple.

Scanning Photos for Child Sexual Abuse Material (CSAM)

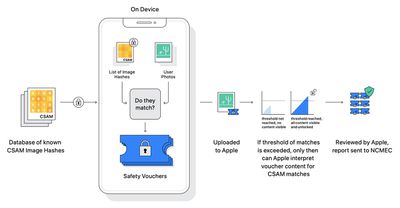

Second, starting this year with iOS 15 and iPadOS 15, Apple will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies.

Apple said its method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, Apple said the system will perform on-device matching against a database of known CSAM image hashes provided by the NCMEC and other child safety organizations. Apple said it will further transform this database into an unreadable set of hashes that is securely stored on users' devices.

The hashing technology, called NeuralHash, analyzes an image and converts it to a unique number specific to that image, according to Apple.

"The main purpose of the hash is to ensure that identical and visually similar images result in the same hash, while images that are different from one another result in different hashes," said Apple in a new "Expanded Protections for Children" white paper. "For example, an image that has been slightly cropped, resized or converted from color to black and white is treated identical to its original, and has the same hash."
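To make the idea concrete, here is a minimal sketch of a perceptual hash. It is not NeuralHash, whose internals are not described here. It uses a much simpler, well-known technique called an average hash, and the pixel grids and function names are made up for illustration. The point is only to show how a lightly edited copy can hash to the same value while an unrelated image does not.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean.

    `pixels` is a grid (list of rows) of grayscale values. This is an
    illustrative stand-in for a perceptual hash, not Apple's NeuralHash.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)


def hamming(a, b):
    """Count the positions at which two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))


# Hypothetical 4x4 grayscale image: dark on the left, bright on the right.
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [10, 20, 200, 210],
    [15, 25, 205, 215],
]

# A lightly edited copy: every pixel dimmed by 10%.
dimmed = [[int(p * 0.9) for p in row] for row in original]

# A different image: the same picture mirrored left to right.
mirrored = [list(reversed(row)) for row in original]

h_original = average_hash(original)
h_dimmed = average_hash(dimmed)
h_mirrored = average_hash(mirrored)

print(h_original == h_dimmed)            # the edited copy keeps the same hash
print(hamming(h_original, h_mirrored))   # the different image differs in many bits
```

A production system would need to be robust to far more transformations than this toy example handles, which is presumably why Apple uses a machine-learning-based hash instead.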

Before an image is stored in iCloud Photos, Apple said an on-device matching process is performed for that image against the unreadable set of known CSAM hashes. If there is a match, the device creates a cryptographic safety voucher. This voucher is uploaded to iCloud Photos along with the image, and once an undisclosed threshold of matches is exceeded, Apple is able to interpret the contents of the vouchers for CSAM matches. Apple then manually reviews each report to confirm there is a match, disables the user's iCloud account, and sends a report to NCMEC. Apple is not sharing what its exact threshold is, but said the system ensures an "extremely high level of accuracy" so that accounts are not incorrectly flagged.
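The "nothing is readable until a threshold is reached" property can be illustrated with a standard cryptographic building block, Shamir secret sharing. The sketch below is an assumption-laden illustration, not Apple's construction: the threshold of 3, the key value, and the function names are all hypothetical. Each matching voucher is imagined to carry one share of a decryption key, and the key can only be recovered once enough shares exist.

```python
import random

PRIME = 2**61 - 1  # a Mersenne prime used as the field modulus


def make_shares(secret, threshold, count, rng):
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    # Coefficients are drawn nonzero so the polynomial really has that degree.
    coeffs = [secret] + [rng.randrange(1, PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares):
    """Lagrange-interpolate the polynomial at x = 0 from the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical values for illustration only. A real system would use a
# cryptographically secure random source rather than a seeded generator.
rng = random.Random(0)
key = 123456789
shares = make_shares(key, threshold=3, count=5, rng=rng)

print(reconstruct(shares[:3]) == key)  # enough shares: the key is recovered
print(reconstruct(shares[:2]) == key)  # too few shares: the key is not recovered
```

With fewer shares than the threshold, interpolation yields an unrelated value, which mirrors Apple's claim that it cannot interpret the vouchers for accounts that stay below the threshold.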

Apple said its method of detecting known CSAM provides "significant privacy benefits" over existing techniques:

• This system is an effective way to identify known CSAM stored in iCloud Photos accounts while protecting user privacy.

• As part of the process, users also can't learn anything about the set of known CSAM images that is used for matching. This protects the contents of the database from malicious use.

• The system is very accurate, with an extremely low error rate of less than one in one trillion accounts per year.

• The system is significantly more privacy-preserving than cloud-based scanning, as it only reports users who have a collection of known CSAM stored in iCloud Photos.

The underlying technology behind Apple's system is quite complex, and the company has published a technical summary with more details.

"Apple's expanded protection for children is a game changer. With so many people using Apple products, these new safety measures have lifesaving potential for children who are being enticed online and whose horrific images are being circulated in child sexual abuse material," said John Clark, the President and CEO of the National Center for Missing & Exploited Children. "At the National Center for Missing & Exploited Children we know this crime can only be combated if we are steadfast in our dedication to protecting children. We can only do this because technology partners, like Apple, step up and make their dedication known. The reality is that privacy and child protection can co-exist. We applaud Apple and look forward to working together to make this world a safer place for children."

Expanded CSAM Guidance in Siri and Search

Third, Apple said it will be expanding guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

The updates to Siri and Search are coming later this year in an update to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey, according to Apple.
