Apple's 'Hey Siri' Feature in iOS 9 Uses Individualized Voice Recognition

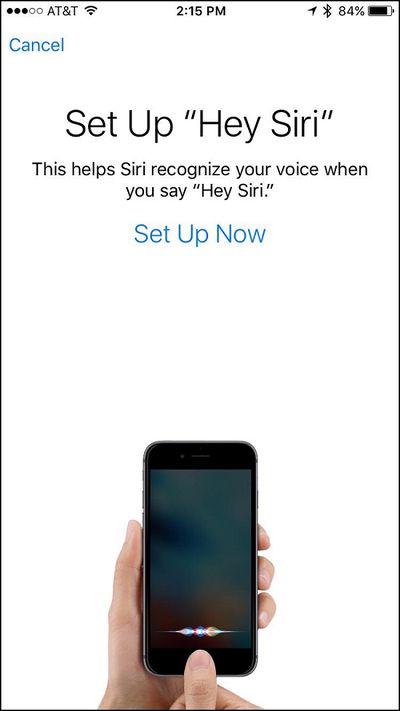

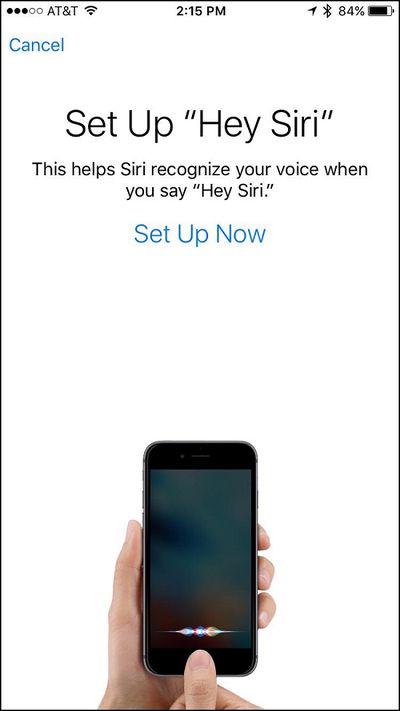

Following the release of the first public beta of iOS 9.1 yesterday, along with the GM version on Wednesday, a few testers have come across a new feature introduced in the update. In the Settings app, Apple appears to have quietly added a set-up process for the new "Hey Siri" feature coming to the iPhone 6s and iPhone 6s Plus, whose built-in M9 motion coprocessor enables the phones' always-on functionality.

Although unconfirmed by Apple, the discovery in iOS 9.1 suggests that Siri will be able to detect specific users' voices and determine whether or not the iPhone's owner is the one speaking to her. In a similar vein to the way Touch ID is designed to work better the more you unlock an iPhone with the fingerprint sensor, the set-up process appears to guide users through saying words or phrases to better acclimate Siri to each iPhone owner.

Found in General > Siri > Allow 'Hey Siri', the new always-on feature is the next step up in the technology for Apple, allowing users to ask Siri questions or make changes within the iPhone's apps by simply saying "Hey Siri" near the iPhone. The new set-up process discovered today could also simply be a way for Siri to detect voices better in general, rather than being specific to each user. With the iPhone 6s and iPhone 6s Plus launching in just two weeks, it won't be long until everyone can find out for themselves.

Thanks Alan and Daniel!
