Machine Learning

By MacRumors Staff

Machine Learning Articles

Apple Developing AI Tool to Help Developers Write Code for Apps

Apple is working on an updated version of Xcode that will include an AI tool for generating code, reports Bloomberg. The AI tool will be similar to GitHub Copilot from Microsoft, which can generate code based on natural language requests and convert code from one programming language to another.

The Xcode AI tool will be able to predict and finish blocks of code, allowing developers to...

Apple CEO Tim Cook on Generative AI: 'We're Investing Quite a Bit'

During today's earnings call covering the fourth fiscal quarter of 2023, Apple executives held a Q&A session with analysts and investors. Apple CEO Tim Cook was questioned about how Apple might be able to monetize generative AI, which he of course declined to comment on, but he said that Apple is "investing quite a bit" in AI and that there are going to be product advancements that involve...

Apple Experimenting With 'Apple GPT' AI Tool, No Launch Planned Yet

Apple is working on "Apple GPT" artificial intelligence projects that could rival OpenAI's ChatGPT, according to Bloomberg's Mark Gurman. Work on AI has become a priority for Apple over the course of the last few months, as chatbot services and AI functions in apps have proliferated.

The Cupertino company has developed an "Ajax" framework for large language models like ChatGPT, Microsoft's...

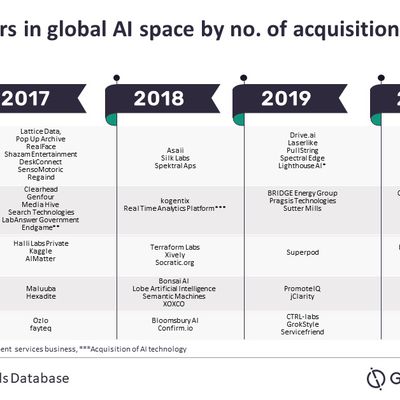

Apple Bought the Most AI Companies From 2016 to 2020

Apple is the leading buyer of companies in the global artificial intelligence space, according to data shared today by GlobalData. From 2016 to 2020, Apple acquired the highest number of AI companies, beating out Accenture, Google, Microsoft, and Facebook, all of whom also had a high number of AI acquisitions.

Over the course of the last several years, Apple has bought companies like...

Apple Acquired Machine Learning Startup Inductiv for Siri Improvements

Apple recently purchased Ontario-based machine learning startup Inductiv for the purpose of improving Siri, reports Bloomberg.

Apple confirmed the purchase with one of its typical acquisition statements: "Apple buys smaller technology companies from time to time and we generally do not discuss our purpose or plans."

Inductiv's engineering team joined Apple after the acquisition to work on ...

Apple Purchases Machine Learning Startup Laserlike

Apple last year acquired Laserlike, a machine learning startup located in Silicon Valley, reports The Information. Apple's purchase of the four-year-old company was confirmed by an Apple spokesperson with a standard acquisition statement: "Apple buys smaller technology companies from time to time and we generally do not discuss our purpose or plans."

Laserlike's website says that its core...

Apple AI Chief John Giannandrea Gets Promotion to Senior Vice President

Apple today announced that John Giannandrea, who handles machine learning and AI for the company, has been promoted to Apple's executive team and is now listed on the Apple Leadership page as a senior vice president.

Apple hired Giannandrea as its chief of machine learning and AI strategy in April 2018, stealing him away from Google, where he ran Google's search and artificial intelligence...

Apple Details How HomePod Can Detect 'Hey Siri' From Across a Room, Even With Loud Music Playing

In a new entry in its Machine Learning Journal, Apple has detailed how Siri on the HomePod is designed to work in challenging usage scenarios, such as during loud music playback, when the user is far away from the HomePod, or when there are other active sound sources in a room, such as a TV or household appliances.

An overview of the task: The typical audio environment for HomePod has many...

Apple to Attend World's Largest Machine Learning Conference Next Week

Apple today announced that it will be attending the 2018 Conference on Neural Information Processing Systems, aka NeurIPS, in Montréal, Canada from December 2 through December 8. Apple will have a booth staffed with machine learning experts and invites any conference attendees to drop by and chat.

NeurIPS is in its 32nd year and is said to be the world's largest and most influential machine ...

Apple Details Improvements to Siri's Ability to Recognize Names of Local Businesses and Destinations

In a new entry in its Machine Learning Journal, Apple has detailed how it approached the challenge of improving Siri's ability to recognize names of local points of interest, such as small businesses and restaurants.

In short, Apple says it has built customized language models that incorporate knowledge of the user's geolocation, known as Geo-LMs, improving the accuracy of Siri's automatic...

Apple Updates Leadership Page to Include New AI Chief John Giannandrea

Apple today updated its Apple Leadership page to include John Giannandrea, who now serves as Apple's Chief of Machine Learning and AI Strategy.

Apple hired Giannandrea back in April, stealing him away from Google where he ran the search and artificial intelligence unit.

Giannandrea is leading Apple's AI and machine learning teams, reporting directly to Apple CEO Tim Cook. He has taken...

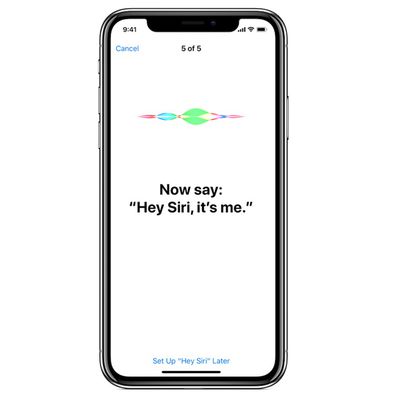

Apple's Latest Machine Learning Journal Entry Focuses on 'Hey Siri' Trigger Phrase

Apple's latest entry in its online Machine Learning Journal focuses on the personalization process that users partake in when activating "Hey Siri" features on iOS devices. Across all Apple products, "Hey Siri" invokes the company's AI assistant, and can be followed up by questions like "How is the weather?" or "Message Dad I'm on my way."

"Hey Siri" was introduced in iOS 8 on the iPhone 6,...

Apple Shares Research into Self-Driving Car Software That Improves Obstacle Detection

Apple computer scientists working on autonomous vehicle technology have posted a research paper online describing how self-driving cars can spot cyclists and pedestrians using fewer sensors (via Reuters).

The paper by Yin Zhou and Oncel Tuzel was submitted to the moderated scientific pre-print repository arXiv on November 17, in what appears to be Apple's first publicly disclosed research on...

Deep Neural Networks for Face Detection Explained on Apple's Machine Learning Journal

Apple today published a new entry in its online Machine Learning Journal, this time covering an on-device deep neural network for face detection, aka the technology that's used to power the facial recognition feature used in Photos and other apps.

Facial detection features were first introduced as part of iOS 10 in the Core Image framework, and they were used on-device to detect faces in photos...

Apple Says 'Hey Siri' Detection Briefly Becomes Extra Sensitive If Your First Try Doesn't Work

A new entry in Apple's Machine Learning Journal provides a closer look at how hardware, software, and internet services work together to power the hands-free "Hey Siri" feature on the latest iPhone and iPad Pro models.

Specifically, a very small speech recognizer built into the embedded motion coprocessor runs all the time and listens for "Hey Siri." When just those two words are detected,...

Apple Updates Machine Learning Journal With Three Articles on Siri Technology

Back in July, Apple introduced the "Apple Machine Learning Journal," a blog detailing Apple's work on machine learning, AI, and other related topics. The blog is written entirely by Apple's engineers, and gives them a way to share their progress and interact with other researchers and engineers.

Apple today published three new articles to the Machine Learning Journal, covering topics that are...

Apple Launches New Blog to Share Details on Machine Learning Research

Apple today debuted a new blog called the "Apple Machine Learning Journal," with a welcome message for readers and an in-depth look at the blog's first topic: "Improving the Realism of Synthetic Images." Apple describes the Machine Learning Journal as a place where users can read posts written by the company's engineers, related to all of the work and progress they've made for technologies in...

Apple Expanding Seattle Hub Working on AI and Machine Learning

Apple will expand its presence in downtown Seattle, where it has a growing team working on artificial intelligence and machine learning technologies, according to GeekWire.

The report claims Apple will expand into additional floors in Two Union Square, and this will allow its Turi team to move into the building and provide space for future employees. “We’re trying to find the best people...

Apple Hires Carnegie Mellon Researcher to Lead AI Team

Carnegie Mellon University professor Russ Salakhutdinov has been hired by Apple to lead a team focused on artificial intelligence, according to a tweet Salakhutdinov sent out this morning. He will continue to teach at Carnegie Mellon, but will also serve as "Director of AI Research" at Apple.

In his tweet, Salakhutdinov says he is seeking additional research scientists with machine learning...

Apple Hiring for New Machine Learning Division Following Turi Acquisition

Following its recent acquisition of Turi, a Seattle-based machine learning and artificial intelligence startup, a pair of new job listings reveal that Apple has spun the company into its new machine learning division.

Apple is looking to hire data scientists and advanced app developers, based in Seattle, who together will help build proof-of-concept apps for multiple Apple products to...