Apple today announced a series of new child safety initiatives aimed at keeping children safer online. The features are arriving with the iOS 15, iPadOS 15, and macOS Monterey updates.

One of the new features, Communication Safety, has raised privacy concerns because it allows Apple to scan images sent and received in the Messages app for sexually explicit content. Apple has confirmed, however, that this is an opt-in feature limited to the accounts of children, and that it must be enabled by parents through the Family Sharing feature.

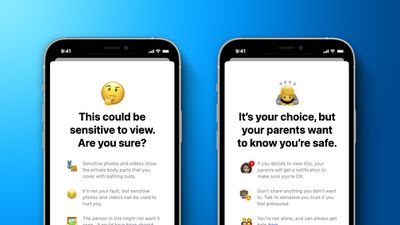

If a parent turns on Communication Safety for the Apple ID account of a child, Apple will scan images that are sent and received in the Messages app for nudity. If nudity is detected, the photo will be automatically blurred and the child will be warned that the photo might contain private body parts.

"Sensitive photos and videos show the private body parts that you cover with bathing suits," reads Apple's warning. "It's not your fault, but sensitive photos and videos can be used to hurt you."

The child can choose to view the photo anyway, and for children who are under the age of 13, parents can opt to get a notification if their child taps through to view a blurred photo. "If you decide to view this, your parents will get a notification to make sure you're OK," reads the warning screen.

These parental notifications are optional and are only available when the child viewing the photo is under the age of 13. Parents cannot be notified when a child between the ages of 13 and 17 views a blurred photo, though children in that age range will still see the warning about sensitive content if Communication Safety is turned on.

Communication Safety cannot be enabled on adult accounts and is only available for users who are under the age of 18, so adults do not need to worry about their content being scanned for nudity.
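The age rules described above can be summarized as a short decision sketch. This is only an illustration of the policy as reported here, not Apple's implementation; the names `Policy`, `communication_safety_policy`, and `parent_is_notified` are hypothetical and chosen for readability.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """What Communication Safety does for a given account (illustrative only)."""
    scans_images: bool                   # images in Messages are checked for nudity
    warns_child: bool                    # flagged photos are blurred with a warning
    parent_notification_available: bool  # parents may opt in to notifications


def communication_safety_policy(age: int, parent_opted_in: bool) -> Policy:
    """Map an account's age and the parent's opt-in choice to the reported behavior."""
    # Adults are never covered, and nothing happens unless a parent opts in.
    if age >= 18 or not parent_opted_in:
        return Policy(False, False, False)
    # All child accounts get scanning and warnings; only under-13 accounts
    # can have parental notifications.
    return Policy(True, True, age < 13)


def parent_is_notified(age: int, parent_opted_in: bool,
                       notifications_enabled: bool, child_viewed: bool) -> bool:
    """A parent is notified only if every condition in the article holds."""
    policy = communication_safety_policy(age, parent_opted_in)
    return (policy.parent_notification_available
            and notifications_enabled
            and child_viewed)
```

Under these assumptions, a 15-year-old with the feature enabled still sees the warning, but `parent_is_notified(15, True, True, True)` is `False`, because notifications are limited to children under 13.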

Parents need to expressly opt in to Communication Safety when setting up a child's device with Family Sharing, and it can be disabled if a family chooses not to use it. The feature uses on-device machine learning to analyze image attachments, and because the analysis happens on-device, the content of an iMessage is not readable by Apple and remains protected with end-to-end encryption.
