In iOS 15, Apple is introducing a new feature called Live Text that can recognize text when it appears in your camera's viewfinder or in a photo you've taken and let you perform several actions with it.

For example, Live Text allows you to capture a phone number from a storefront with the option to place a call, or look up a location name in Maps to get directions. It also incorporates optical character recognition, so you can search for a picture of a handwritten note in your photos and save it as text.

Live Text's content awareness extends to everything from QR codes to email addresses that appear in pictures, and this on-device intelligence feeds into Siri suggestions, too.

For instance, if you take a picture that shows an email address and then open the Mail app and start composing a message, Siri's keyboard suggestions will offer up the option to add "Email from Camera" to the To field of your message.

Other Live Text options include the ability to copy text from the camera viewfinder or photos for pasting elsewhere, share it, look it up in the dictionary, and translate it for you into English, Chinese (both simplified and traditional), French, Italian, German, Spanish, or Portuguese.

It can even sort your photos by location, people, scene, objects, and more, by recognizing the text in pictures. For example, searching for a word or phrase in Spotlight search will bring up pictures from your Camera Roll in which that text occurs.

Live Text works in Photos, Screenshot, Quick Look, and Safari, as well as in live previews in the Camera app. In Camera, it's available whenever you point your iPhone at anything that displays text, and a small icon appears in the bottom right corner of the viewfinder whenever text is recognized. Tapping the icon lets you select the recognized text and perform an action with it. A similar icon appears in the Photos app when you're viewing a captured image.

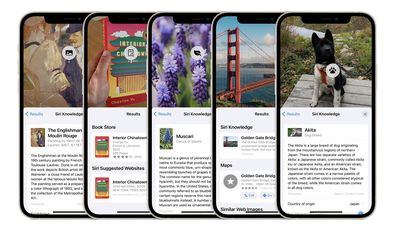

In another Neural Engine feature, Apple is introducing something called Visual Look Up that lets you take photos of objects and scenes to get more information about them. Point your iPhone's camera at a piece of art, flora, fauna, landmarks, or books, and the Camera will indicate with an icon that it recognizes the content and has relevant Siri Knowledge that can add context.

Since Live Text relies heavily on Apple's Neural Engine, the feature is only available on iPhones and iPads with an A12 Bionic chip or later. That means if you have an iPhone X or earlier model, or any iPad older than the iPad mini (5th generation), iPad Air (2019, 3rd generation), or iPad (2020, 8th generation), then unfortunately you won't have access to it.

The iOS 15 beta is currently in the hands of developers, with a public beta set to be released next month. The official launch of iOS 15 is scheduled for the fall.