Loup Ventures

By MacRumors Staff

Loup Ventures Articles

New Apple Services Could Include 'Podcasts+,' 'Stocks+,' and 'Mail+,' Analysts Predict

A range of new Apple services could include "Podcasts+," "Stocks+," and "Mail+," according to a new report by Loup Ventures analysts.

Apple's subscription services are increasingly important to its business model, and the segment is now almost the size of a Fortune 50 company by revenue, having grown 16 percent in 2020 to $53.7 billion. Loup Ventures highlights that Spotify accumulated 144...

Combined Hardware-Software Subscription Bundles a Logical Step for Apple, Analysts Argue

Apple is positioning itself to launch a combined hardware and software subscription, according to Loup Ventures analysts.

The report makes a compelling argument, based on a range of industry trends, aggregated data, and existing infrastructure, that Apple is in a prime position to launch an all-in-one hardware and software subscription.

Similar to the iPhone upgrade program, we believe, ...

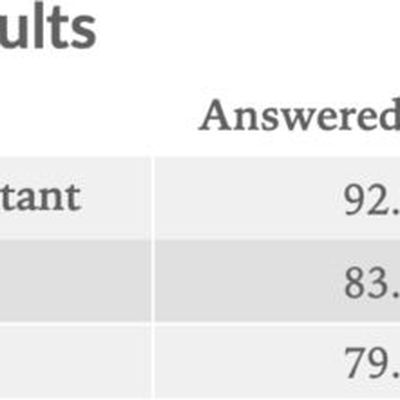

Siri Answers 83% of Questions Correctly in Test, Beating Alexa But Trailing Google Assistant

In an annual test comparing Google Assistant, Siri, and Alexa on smartphones, Loup Ventures' Gene Munster found that Siri was able to correctly answer 83 percent of questions, beating Alexa but trailing behind Google Assistant.

Munster asked each digital assistant 800 questions during the test to compare how each one responded. Alexa answered 79.8 percent of questions correctly, while Google ...

Siri on HomePod Asked 800 Questions and Answered 74% Correctly vs. Just 52% Earlier This Year

Apple analyst Gene Munster of Loup Ventures recently tested the accuracy of digital assistants on four smart speakers by asking Alexa, Siri, Google Assistant, and Cortana a series of 800 questions each on the Amazon Echo, HomePod, Google Home Mini, and Harman Kardon Invoke, respectively.

The results indicate that Siri on the HomePod correctly answered 74.6 percent of the questions, a dramatic ...