Apple today held a question-and-answer session with reporters regarding its new child safety features, and during the briefing, the company confirmed that it would be open to expanding the features to third-party apps in the future.

As a refresher, Apple unveiled three new child safety features coming to future versions of iOS 15, iPadOS 15, macOS Monterey, and/or watchOS 8.

Apple's New Child Safety Features

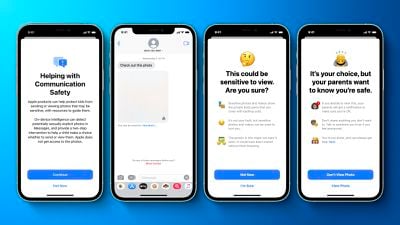

First, an optional Communication Safety feature in the Messages app on iPhone, iPad, and Mac can warn children and their parents when receiving or sending sexually explicit photos. When the feature is enabled, Apple said the Messages app will use on-device machine learning to analyze image attachments, and if a photo is determined to be sexually explicit, the photo will be automatically blurred and the child will be warned.

Second, Apple will be able to detect known Child Sexual Abuse Material (CSAM) images stored in iCloud Photos, enabling Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC), a non-profit organization that works in collaboration with U.S. law enforcement agencies. Apple confirmed today that the process will only apply to photos being uploaded to iCloud Photos and not videos.

Third, Apple will be expanding guidance in Siri and Spotlight Search across devices by providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.

Expansion to Third-Party Apps

Apple said that while it does not have anything to share today in terms of an announcement, expanding the child safety features to third parties so that users are even more broadly protected would be a desirable goal. Apple did not provide any specific examples, but one possibility could be the Communication Safety feature being made available to apps like Snapchat, Instagram, or WhatsApp so that sexually explicit photos received by a child are blurred.

Another possibility is that Apple's known CSAM detection system could be expanded to third-party apps that upload photos to services other than iCloud Photos.

Apple did not provide a timeframe for when the child safety features could expand to third parties, noting that it still has to complete testing and deployment of the features. The company also said it would need to ensure that any potential expansion would not undermine the privacy properties or effectiveness of the features.

Broadly speaking, Apple said expanding features to third parties is the company's general approach and has been ever since it introduced support for third-party apps with the launch of the App Store on iPhone OS 2 in 2008.